LLMs are legit, but they’ll still cause a bubble

LLMs are by no means like crypto. They are not a fad or a half-baked tech that isn’t ready to be deployed in the real world. LLMs may not be AGI, but they are legit. That isn’t mutually exclusive with being a bubble, though. Even tech that definitely will change the world isn’t immune to being hyped beyond its capabilities. LLMs will add a lot of value to users, more than we can imagine right now, but they will also attract far more investment than their value to end users warrants, and that will only end with a seismic market correction.

How would a bubble even form?

A bubble is just when people think something will add more value to society than it actually does. They may even be right eventually, but the killer is that they overestimate the tech’s capabilities at that point in time or in its current form.

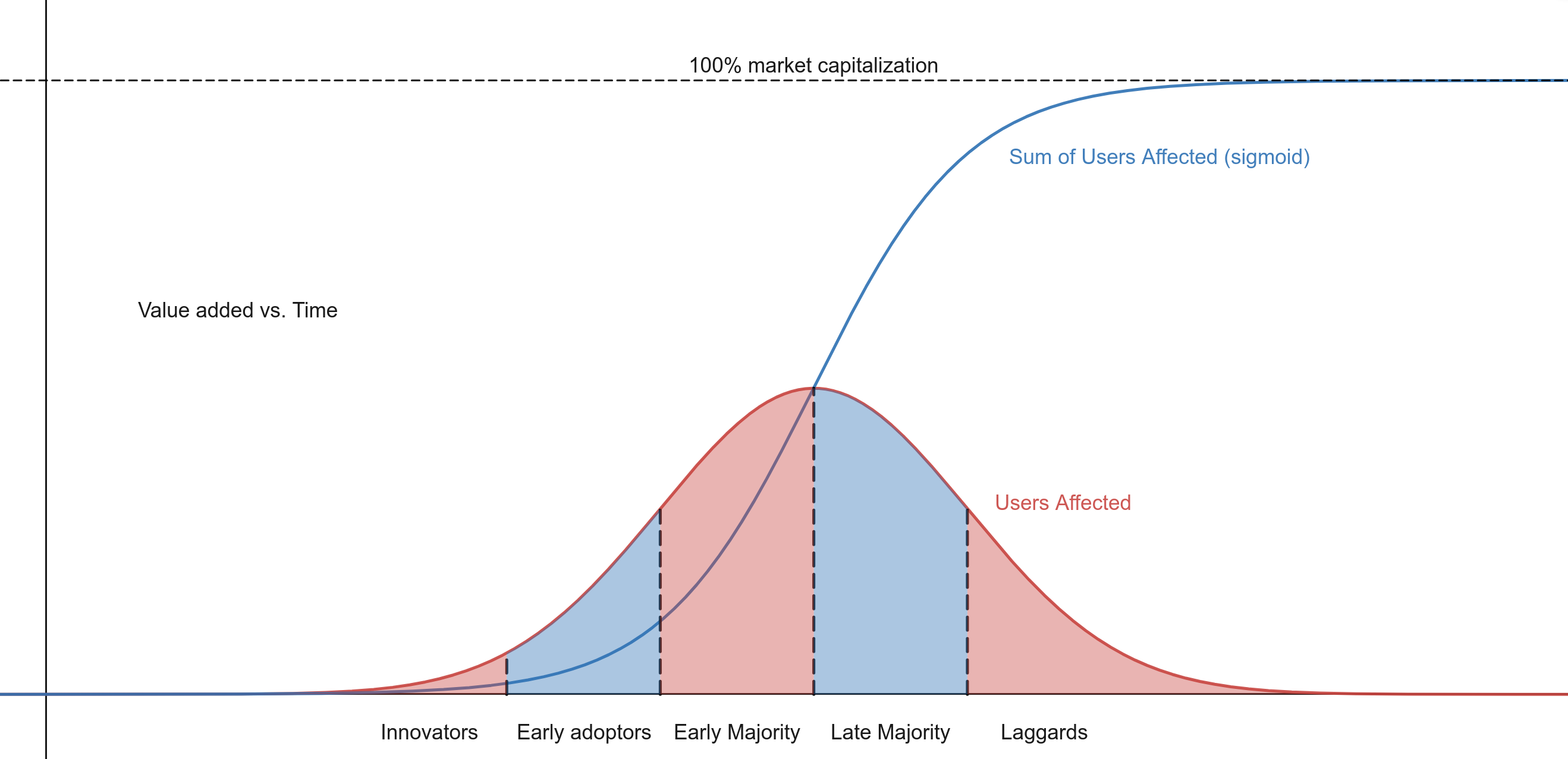

For reference, here’s a graph that shows how a useful new innovation spreads throughout society over time to add value.

The x axis is time and the y axis is some measure of value added to society; here I’ve chosen number of users. Not all users adopt a tech at the same time: no innovation instantaneously becomes the status quo, not even ground-breaking ones. A small number of users adopt early, most adopt later, and a sizable chunk lags behind. The result is that total value added follows a sigmoid curve, which flattens out once the innovation has added all the value it can and stops growing in influence because it’s already everywhere it can be.

That last part is what people forget. Investors forget that society is finite, and that any tech, no matter how good, takes time to diffuse throughout the market and will eventually saturate it.
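To make the saturation concrete, here is a minimal sketch that assumes the sigmoid is a logistic function. The post doesn’t commit to a specific formula, and the cap of 1,000 users, the growth rate and the midpoint below are made-up illustrative values, not data. It counts how many new users adopt in each period and shows that this number rises, peaks, and then falls, even though the running total keeps creeping toward the cap.

```python
import math


def logistic(t, cap=1000.0, rate=1.0, midpoint=10.5):
    """Cumulative adopters at time t under an assumed logistic (sigmoid) model.

    cap, rate and midpoint are hypothetical illustrative parameters.
    """
    return cap / (1.0 + math.exp(-rate * (t - midpoint)))


# Cumulative adopters at periods 0..20.
cumulative = [logistic(t) for t in range(21)]

# New adopters during each period (from t to t + 1).
new_per_period = [b - a for a, b in zip(cumulative, cumulative[1:])]

# Index of the period with the most new adopters.
peak_period = max(range(len(new_per_period)), key=new_per_period.__getitem__)

print(f"new adopters peak in the period starting at t={peak_period}")
print(f"total adopters at t=20: {cumulative[-1]:.1f} (cap is 1000)")
```

New adoption peaks in the period straddling the midpoint (t = 10 to 11 here) and dwindles afterward, while the cumulative total approaches the cap of 1,000 without ever passing it. That ceiling is the part of the curve investors forget.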

But everyone already knows this

Every one of us does know it. But everyone, as a group, doesn't.

Nietzsche once said that “Madness is the exception in individuals but the rule in groups,” and Tommy Lee Jones, as Agent K in Men in Black, once said “A person is smart. People are dumb.”

What happens is that a gold rush forms. People start foaming at the mouth to get in on the incredible investment opportunity that a revolutionary innovation provides. They develop a Fear Of Missing Out (FOMO) and start thinking emotionally rather than rationally.

And billionaires and multi-millionaires are not immune to this. They may not work normal jobs like you or me, but they still have to work to manage their money, and they stress out about that as much as we do about meeting deadlines. Investors know they have to get in on the next big thing or they are failing at their job. And in a world where college kids are running their mouths against blue-chip investment firms, missing out is even more embarrassing.

The investment market FOMOs and acts stupid, and the stupider the market acts, the less sure everyone else becomes that it’s stupid. Ambitions are sky-high, so activity has to increase, or how else will you achieve those goals? Wishful thinking takes over and people start to expect the market to be larger, the cap to be bigger, because wouldn’t it be nice if it was? Expectations begin to diverge from reality.

You can already see this happening with all this talk of LLMs being AGI.

Investors look at data. They don’t just go by feeling

“If you torture the data long enough, it will confess to anything” - Ronald Coase

Often, data isn’t looked at properly. Or the insights from data are ignored if they aren’t convenient. Or incorrect insights are taken as gospel because they are convenient. Or, as the quote above warns, the data is subconsciously massaged until it confirms pre-existing biases.

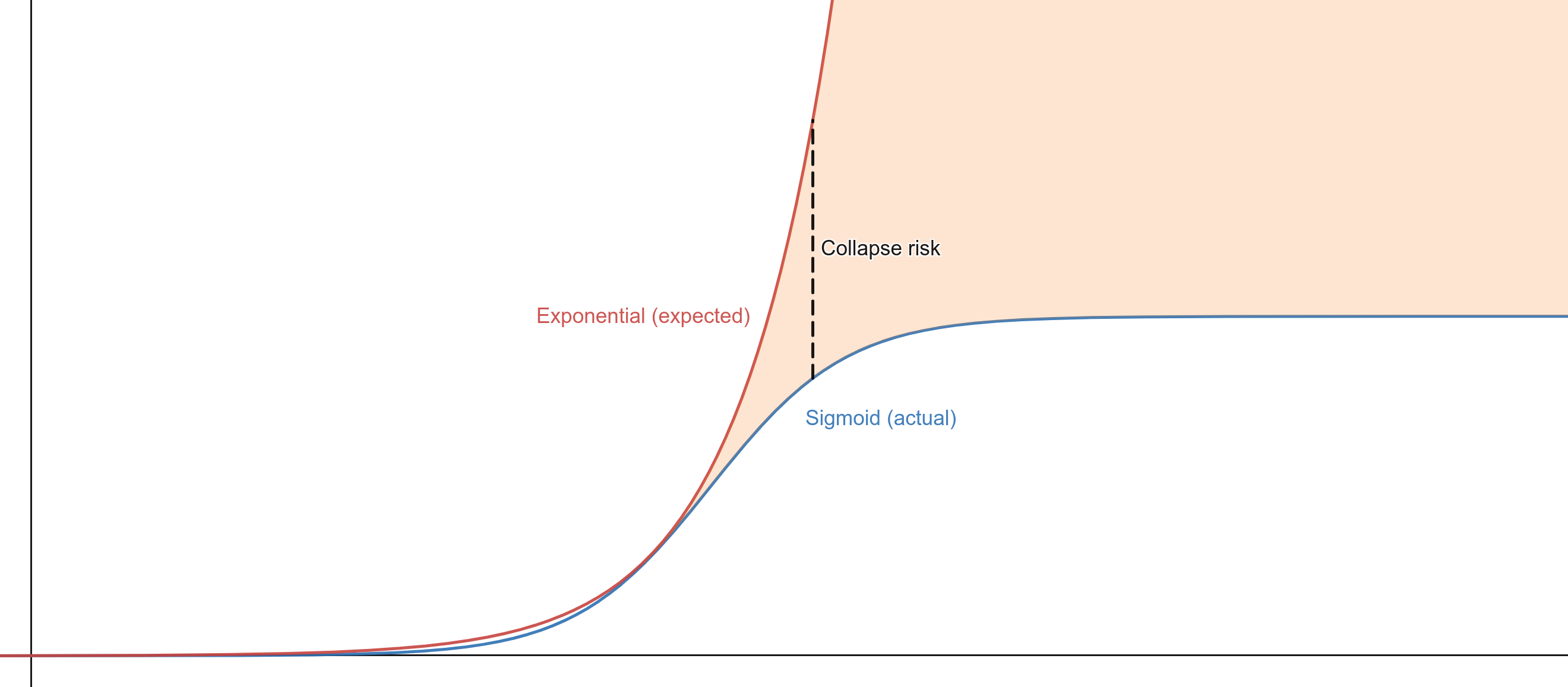

Institutional investors aren’t as sophisticated as one would think. They make decisions based on experience, sure, but that often hides the same biases and logical fallacies that everyone is subject to. The one at play here is the fallacy of looking at the first half of a sigmoid process, and assuming it’s exponential. Here’s a graph for reference.

The blue line is the same sigmoid curve we saw before, the total impact an innovation will have on society over time. The red line is an exponential curve.
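In symbols, one common way to write the two curves is below. This is an assumption, since the exact formulas behind the graph aren’t given: a logistic sigmoid with cap $L$, growth rate $k$ and midpoint $t_0$, and an exponential chosen to match its early growth.

$$S(t) = \frac{L}{1 + e^{-k(t - t_0)}}, \qquad E(t) = L\,e^{k(t - t_0)}$$

Multiplying the top and bottom of $S(t)$ by $e^{k(t - t_0)}$ shows why the two are so hard to tell apart early on:

$$S(t) = \frac{L\,e^{k(t - t_0)}}{1 + e^{k(t - t_0)}} = \frac{E(t)}{1 + e^{k(t - t_0)}} \approx E(t) \quad \text{for } t \ll t_0$$

Well before the midpoint the denominator is close to 1, so the curves nearly coincide. At $t = t_0$ the sigmoid is at $L/2$ while the exponential is already at $L$, and afterward $S(t)$ levels off at $L$ while $E(t)$ keeps growing without bound.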

You will notice that if you are partway through the diffusion, especially right before the inflection point where the spread starts to slow down, reality matches that exponential curve pretty well. So if you assume that this tech will just keep growing, that it will be a rocket ship that goes to the moon, then you can achieve all your wildest dreams by just buying in. And it’s all backed by the data.
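The fallacy is easy to reproduce. This is a sketch that assumes the sigmoid is a logistic function with made-up parameters (a cap of 1,000, growth rate 0.5, midpoint at t = 20). It “observes” only the stretch before the midpoint, fits an exponential to it by ordinary least squares on the logarithm of the values, and then extrapolates.

```python
import math


def logistic(t, cap=1000.0, rate=0.5, midpoint=20.0):
    """'Reality': cumulative adoption following an assumed logistic sigmoid.

    cap, rate and midpoint are hypothetical illustrative parameters.
    """
    return cap / (1.0 + math.exp(-rate * (t - midpoint)))


# Observe only the early part of the curve, t = 0..15 (before the midpoint).
observed_t = list(range(16))
observed_y = [logistic(t) for t in observed_t]

# Fit y = a * exp(b * t): an exponential is a straight line in log space,
# so fit log(y) = log(a) + b * t with ordinary least squares.
n = len(observed_t)
log_y = [math.log(y) for y in observed_y]
mean_t = sum(observed_t) / n
mean_log_y = sum(log_y) / n
b = sum((t - mean_t) * (ly - mean_log_y) for t, ly in zip(observed_t, log_y)) / sum(
    (t - mean_t) ** 2 for t in observed_t
)
a = math.exp(mean_log_y - b * mean_t)


def exponential_fit(t):
    """The 'rocket ship' extrapolation fitted to the early data."""
    return a * math.exp(b * t)


# Compare inside the observed window (5, 15) and beyond it (25, 35).
for t in (5, 15, 25, 35):
    print(f"t={t:>2}  sigmoid={logistic(t):9.1f}  exponential fit={exponential_fit(t):12.1f}")
```

Within the observed window the fitted exponential stays within a few percent of the sigmoid, so the fit looks excellent and is, in a narrow sense, “backed by the data.” Extrapolated past the midpoint it overshoots by orders of magnitude, while the sigmoid never exceeds its cap of 1,000.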

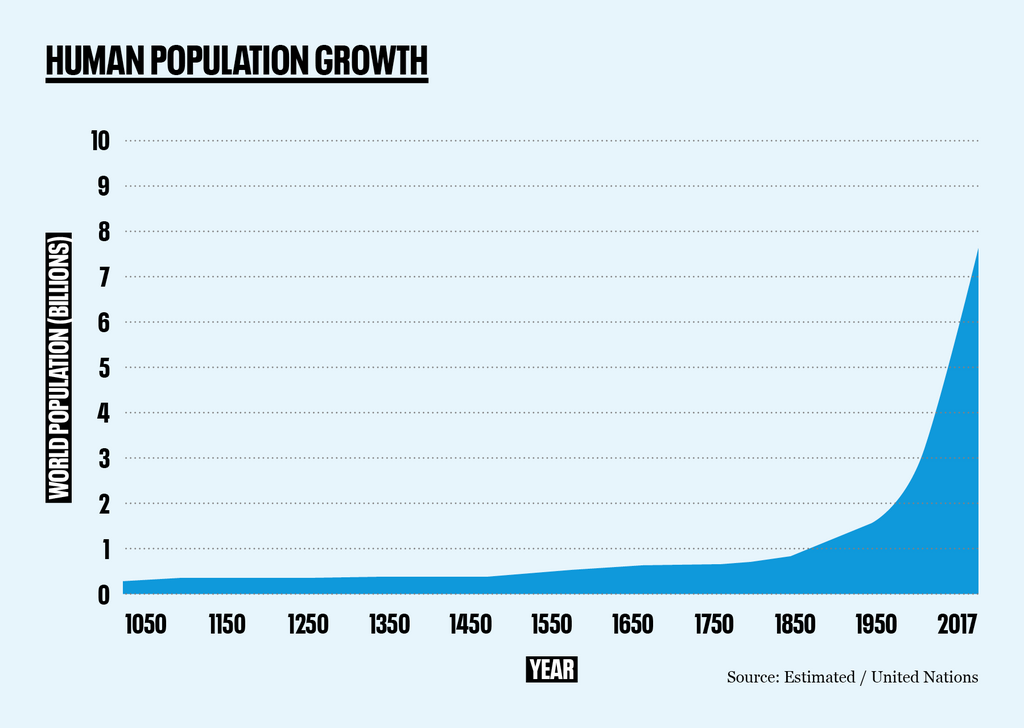

This fallacy is pretty common. A more pessimistic example is when a few years ago everyone started freaking out about overpopulation. Obviously mis-utilizing our resources is an issue but people started to think that population numbers would just keep growing exponentially until we wouldn’t have land to stand on. Population growth follows the same sigmoid model that innovation does, and so when people see graphs like this:

They let their pessimism (or ecofascism) take over and assume the worst case scenario, an exponential curve. And the worst part is that it looks like it fits pretty well.

So what will this look like?

Probably like the dotcom bubble, since that’s the last bubble we had based on a legitimate innovation.

It’ll be very nice at first. Lots of jobs, lots of investment being thrown about, lots of choices for consumers. You’ll be able to use products that are being sold at a loss because the company thinks that makes sense. If you wear a nice suit and get a nice haircut, you could scribble a garbage business plan on a napkin and get millions in funding. Be the same gender, ethnicity, and socio-economic class as the investor, and you might not even need the napkin.

Expect to see a lot of companies that are just front-ends for OpenAI’s API, which is stupid because the future of LLMs is open source and locally hosted. The amount of money in LLMs will be stupid, and getting it will be stupid easy.

But it’s no secret that bubbles pop.

In the exponential versus sigmoid graph above, you’ll see that I shaded in the area between the two. At any point in time, the difference between reality and expectation is like an elastic band pulling the market down: the larger the delta, the harder it pulls the market back to reality. Companies won’t make as much money as they thought they would. Competitors will cannibalize each other’s operations. And then some critical event will make investors realize that they’re throwing money into a pit and need to cut their losses. That’s when the market will painfully snap from the fantasy red line down to the cruel reality of the blue line.

As in the dotcom bubble, a lot of people will have specialized in a field only to find demand drying up. Prompt Engineers will be what WebDevs were in the early 2000s.

But websites were a legit tech, and they did survive and thrive after the market correction. So will LLMs and generative AI. The world as we know it will change as much as it has since the 1990s. We will see these technologies weave their way into our society in unpredictable ways that still involve a lot of money for those who build and deliver them.

The long term future of the Generative AI market is safe, stable and prosperous. The short term will be stupid.

TL;DR

- Legitimate value and bubbles are not mutually exclusive

- The innovation diffusion cycle grows along a sigmoid curve

- People overfit an exponential curve to its first half

- The talk of LLMs being AGI is an example of this

- This is similar in nature to the dotcom bubble and will play out the same way