Of course LLMs aren’t AGI, but the question itself is a huge compliment

The release of ChatGPT has sparked a huge debate amongst tech enthusiasts as to whether LLMs are or could soon become AGI. I personally think it’s obvious that they aren't yet and probably won't be in the near future.

But I don't want to bring LLMs down a peg; in fact, I want to bring them up a peg. Among the people who doubt whether LLMs are AGI, there are some who doubt whether LLMs are a revolutionary technology at all. That’s just ridiculous, and I want to talk about that.

Who moved my goalposts?

The reality of the situation is that LLMs accomplished their actual purpose so well that people now take it for granted. LLMs exceeded expectations by so much that people subconsciously had to graduate to the new expectation of “But is it AGI though?”

Imagine a 10-year-old playing in a children’s baseball game and absolutely dominating, hitting nothing but home runs to the point where the opposing team knows they have no hope of coming back. It's obvious to everyone that the mercy rule will have to be applied to end the game early and put the opposition out of their misery. Now imagine one of the people in the stands saying “yeah, but he couldn’t have done that against the Yankees”.

That's what some of the discourse around LLMs being AGI is.

So what did LLMs accomplish?

Plain and simple? They solved NLP. Completely.

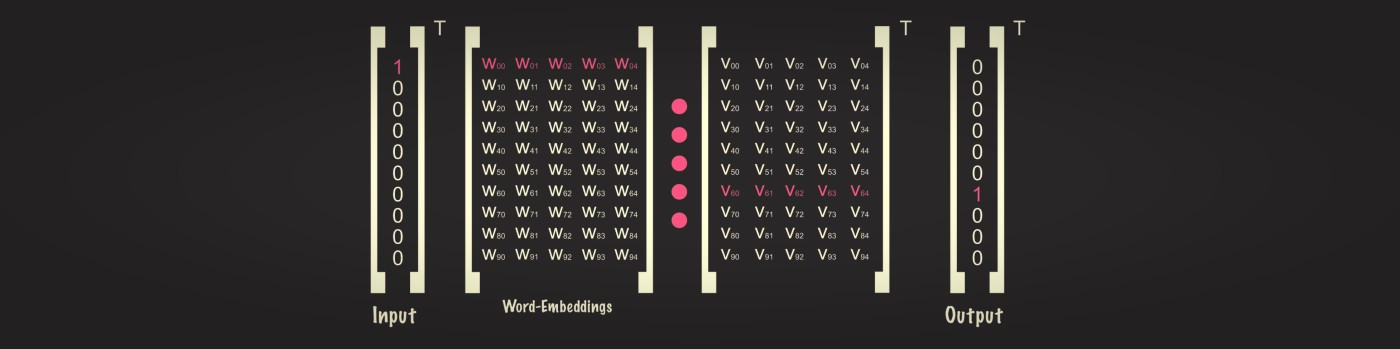

You need to understand the world of NLP before LLMs. An NLP engine’s capability was informally benchmarked by the age of the human whose understanding it most closely resembled. So word2vec, with its basic understanding of semantic word associations, was like a 2-year-old just learning what words and language even were. BERT, with its slightly more advanced but still basic understanding of sentence structure, named entities and such, was like a 5-year-old who can follow what you're saying if it's simple enough, but can’t respond with any sophistication.
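To give a feel for what "semantic word associations" means, here is a minimal sketch. The three-dimensional vectors are made up by hand for illustration (real word2vec embeddings are learned from text and have hundreds of dimensions), and `most_similar` is just a hypothetical helper name, not any library's API. The idea it shows is that related words end up with vectors pointing in similar directions, which cosine similarity measures.

```python
import math

# Hypothetical, hand-picked embeddings for illustration only.
# Real word2vec vectors are learned from a corpus, not written by hand.
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.9, 0.7, 0.2],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(word):
    """Return the other known word whose vector is closest to `word`'s."""
    target = EMBEDDINGS[word]
    others = (w for w in EMBEDDINGS if w != word)
    return max(others, key=lambda w: cosine_similarity(target, EMBEDDINGS[w]))


print(most_similar("king"))
```

With these toy vectors, "king" lands next to "queen" rather than "apple". That is roughly the level of understanding the 2-year-old analogy is pointing at: the model knows which words go together, and not much else.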

GPT-3 is an adult. You can have a conversation with it. You can ask it questions like you would a person and it will always respond like a human would. It might not be factually correct. It might straight up lie. But it’s undeniable that it has a complete and perfect mastery over parsing what the question was referring to, and how to structure a coherent and valid response.

This is the core of NLP, and LLMs have cracked it.

But they’re wrong sometimes

Being factually correct about its environment was never an expectation of NLP models; it's an expectation of AGI.

If you ask ChatGPT to write you a tutorial on an algorithm to balance a binary tree and it gets the actual logic of the algorithm wrong, then that only proves that it’s not AGI. The fact that it understood what a tutorial was, and that you wanted one to be constructed, is astounding. It could even write the tutorial as though a pirate were giving it; all you’d have to do is tell it to do so. This is something nothing before could ever have hoped to accomplish.

The fact that LLMs are able to do simple addition using no labeled data at all, just a large unstructured corpus of natural language and the two unsupervised tasks of learning an embedding and next-word prediction, is amazing. It’s genuinely stupefying, and it would be so even if they got all the sums wrong, because they would still demonstrate such a deep understanding of natural language that they could discern that the input was a query and offer a valid (incorrect, but valid nonetheless) response.
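To make the "no labeled data" point concrete, here is a minimal sketch of next-word prediction on raw text. It is a deliberately crude stand-in: it just counts which word follows which (a bigram model), whereas an LLM trains a large neural network on the same kind of objective. The corpus and the function names `train_bigram_model` and `predict_next` are made up for this example. What it shows is that nobody annotates anything; the text supplies its own training pairs, because every word is the "answer" for the word before it.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the raw text.

    No labels are involved: each (word, next word) pair comes straight
    from the text itself.
    """
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny made-up corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigram_model(corpus)

print(predict_next(model, "sat"))
print(predict_next(model, "the"))
```

On this toy corpus the model guesses "on" after "sat" and "cat" after "the", purely from counting. Everything impressive about LLMs comes from scaling that same self-supplied signal up enormously, which is exactly why the result is so surprising.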

Conclusion

The fact that LLMs have solved NLP is astounding, and the fact that they did it without labeled data is just as astounding. It's not just a huge achievement; it’s also going to fundamentally change the way we do things. Not to the degree AGI will, but it will still be seismic.

TL;DR

- LLMs aren’t AGI

- But even asking that is missing the whole point

- LLMs completely solved NLP

- That's definitely going to change the world